Data Center Cooling Infrastructure

With the growing adoption of modern technology through smartphones, personal computers and tablets, demand for data center processing has increased worldwide. For every handheld computing device attached to the cloud, a data center is processing the required information. This has driven growth in data centers, which is expected to continue at a rate of 14% per year*. To meet this challenge, data center designers and operators are striving for optimum designs that conserve energy.

Over the past several years Computational Fluid Dynamics (CFD) has proven to be a reliable tool for modeling data centers, and it is gaining popularity for studying the cooling performance of the aisles and racks in a data center. Thermal mapping with CFD can expose problem areas (hotspots) that otherwise don’t show up in simple heat and flow balance calculations. This blog introduces the general data center infrastructure; the next part covers the CFD modeling approach for data centers using commercial software.

A data center is a dedicated space where companies can store and operate most of the information and communications technology (ICT) infrastructure that supports their business. This includes the servers and storage equipment that run application software and process and store data and content. For some companies this might be a simple cage or rack of equipment, while for others it can be a room housing a few or many cabinets, depending on the scale of their operations. The space typically has a raised floor with cabling ducts running underneath to feed power to the cabinets and carry the cables that connect the cabinets together.

The data center environment is controlled in terms of factors such as temperature and humidity to ensure both the performance and the operational integrity of the systems within. The facilities generally include power supplies, backup power, chillers, cabling, fire and water detection systems and security controls. Data centers can be in-house, located in a company’s own facility, or outsourced, with equipment being colocated at a third-party site. Outsourcing does not necessarily mean relinquishing control of your equipment and can be as simple as finding the right place to house that equipment.

Types of Data Center:

Data centers can be classified in several ways, as given below:

1. Based on application

- Public cloud providers : e.g. Amazon, Google

- Scientific computing centers : e.g. National laboratories

- Co-location centers : e.g. Private ‘clouds’ where servers are housed together

- In-house data centers : Facilities owned and operated by the company using the servers

2. Based on Cooling System Design

- Raised floor data center

- Non raised floor data center

3. Based on infrastructure design topologies (Tier standard)

- Tier-1 : Basic Data Center

- Tier-2 : Redundant Data Center

- Tier-3 : Redundant Data Center (with concurrent maintenance)

- Tier-4 : Fault-Tolerant Data Center

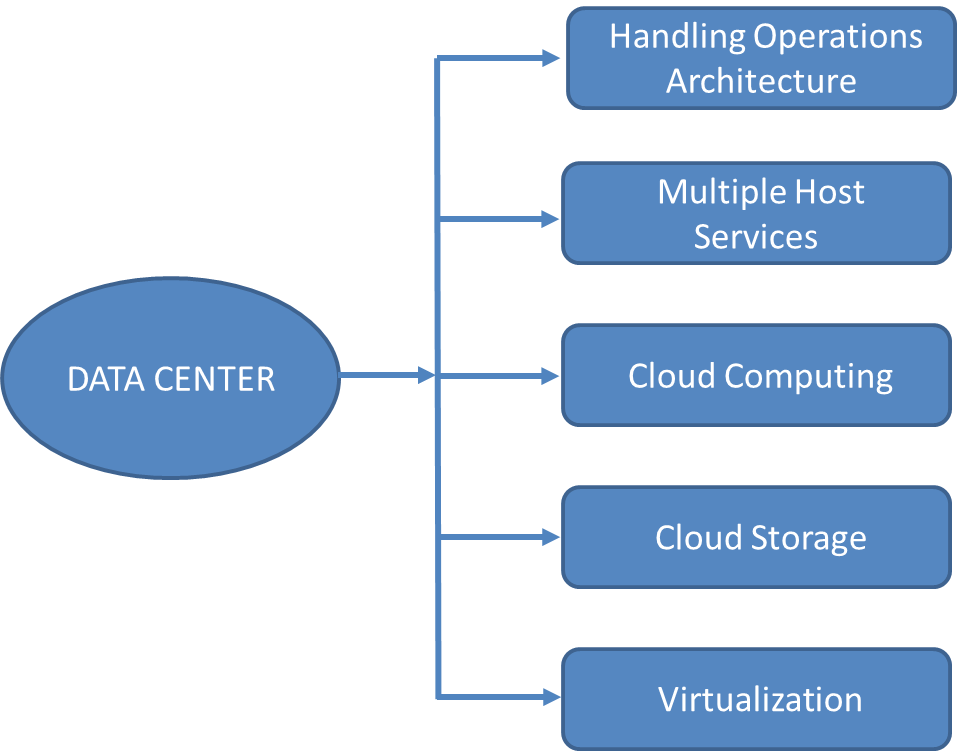

Applications of data center:

Data center storage applications are mission critical at every juncture, requiring the highest performance and reliability available. Typical applications are listed below, with a schematic diagram after the list:

1. Hosting multiple services

- Databases

- File servers

- Application servers

2. Offsite backups

3. Virtualization

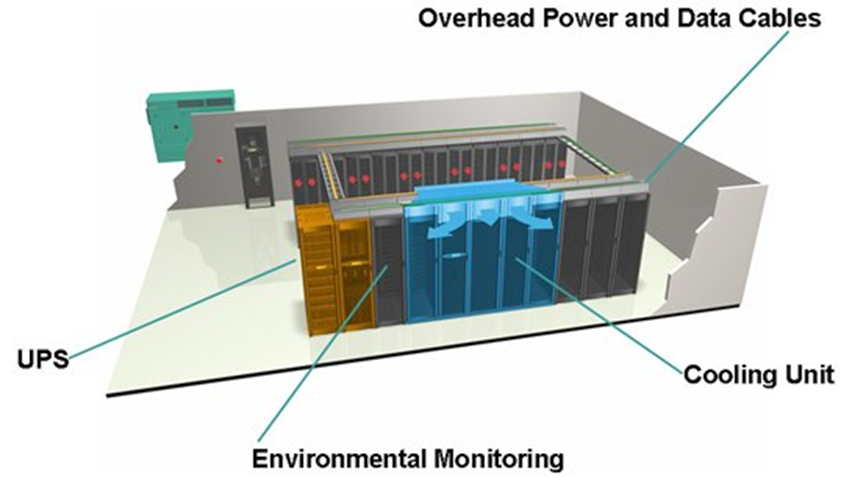

Data center components:

The different components of a data center are given below:

- CRAC- Computer Room Air Conditioning unit is a device that monitors and maintains the temperature, air distribution and humidity in a network room or data center.

- RACK- A rack is a standardized frame or enclosure for mounting multiple equipment modules.

- PDUs and UPS : A PDU is a power distribution unit designed to fit into a server rack in either a vertical or horizontal position.

- Perforated Tiles and Ceiling Grills- Perforated tiles are placed in the floor near computer systems to direct conditioned air to them.

- Duct and Diffusers

- Fans

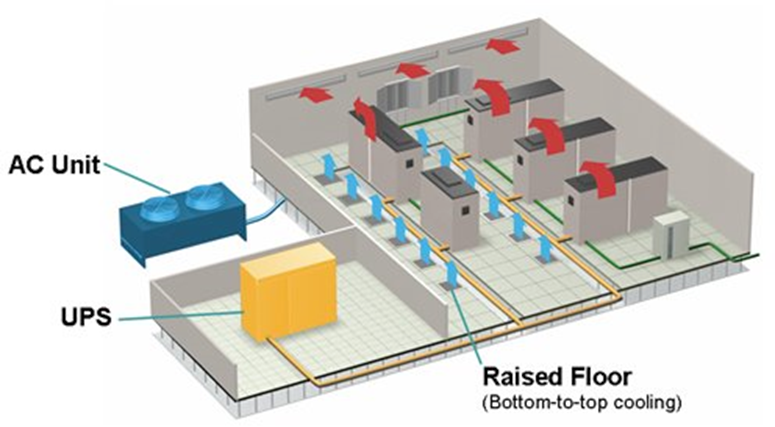

Data center room layout:

There are two basic ways to implement a Data Center room.

- Raised floor

- Non raised floor

A typical data center layout for both configurations is shown below:

Computer room air conditioning (CRAC):

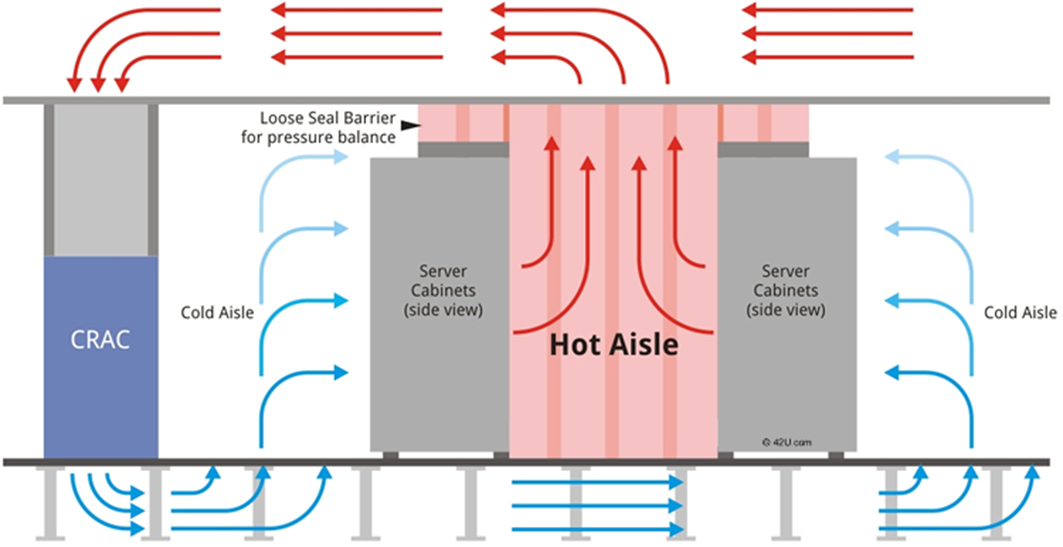

CRAC units are replacing the air-conditioning units that were used in the past to cool data centers. CRAC units can be arranged in a variety of ways. One setup that has been successful is to cool the air and dispense it through a raised floor. The air rises through the perforated sections, forming cold aisles, and flows through the racks, where it picks up heat before exiting from the rear. The warm exit air forms hot aisles behind the racks, and the hot air returns to the CRAC intakes, which are positioned above the floor. CRAC units can be classified on several bases, a few of which are listed below:

1. Based on heat removal method

- Air-cooled CRAC

- Glycol-cooled CRAC

- Water-cooled CRAC

2. Based on physical arrangements

- Ceiling Mounted

- Floor Mounted

3. Based on flow direction

- Top Flow

- Down Flow

- Front Flow

PDU and UPS:

A power distribution unit (PDU) is a device for distributing and controlling electrical power. The most basic PDU is a large power strip without surge protection. It is designed to provide standard electrical outlets for data center equipment and has no monitoring or remote access capabilities. In a data center, however, floor-mounted and rack-mounted PDUs are more sophisticated and can report power consumption. The data provided by a PDU can be used for power usage effectiveness (PUE) calculations.
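As a rough illustration of the PUE calculation mentioned above, PUE is commonly defined as total facility power divided by the power delivered to the IT equipment. The sketch below assumes that the IT load is the sum of the PDU readings; the function name and the sample values are hypothetical and are not measurements from any real site.

```python
def power_usage_effectiveness(total_facility_kw, pdu_readings_kw):
    """Return PUE = total facility power / IT equipment power.

    The IT load is approximated here as the sum of the PDU readings,
    which is an assumption made for this sketch.
    """
    it_load_kw = sum(pdu_readings_kw)
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_load_kw


# Hypothetical example values, for illustration only.
pdu_readings_kw = [42.0, 38.5, 40.5, 39.0]  # IT load reported by four PDUs
total_facility_kw = 256.0                   # power drawn by the whole facility

pue = power_usage_effectiveness(total_facility_kw, pdu_readings_kw)
print(f"PUE = {pue:.2f}")
```

A value close to 1 means that almost all of the power entering the facility reaches the IT equipment; the remainder is consumed by cooling, power conversion losses and other overheads.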

An uninterruptible power supply (UPS) is a device that allows the computer to keep running for at least a short time when the primary power source is lost. It also provides protection from power surges. A UPS has its own rechargeable battery, which provides emergency power for your system immediately when the mains supply is cut, thereby preventing data loss. In the event of a sustained power failure, the UPS will provide sufficient battery power for your files to be saved and for the whole system to be shut down in an orderly manner. If an alternative power source such as a generator is available, the battery will provide sufficient power to keep your system running until the secondary supply is brought online. UPSs also filter the power supply entering your computer system, limiting the detrimental effect of "spikes", "noise" and other electrical disturbances. There are three basic types of UPS systems:

- Standby Power System (SPS)

- Line Interactive

- Double Conversion On-line UPS
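To get a feel for whether a UPS can bridge the gap until a generator comes online, a first-order runtime estimate can be sketched as below. It assumes a constant load, a fixed inverter efficiency and a battery that delivers its full rated energy, none of which holds exactly for real batteries, so treat the result as an optimistic rough figure. All names and numbers are hypothetical.

```python
def ups_runtime_minutes(battery_wh, load_w, inverter_efficiency=0.9):
    """First-order estimate of UPS runtime in minutes.

    Assumes constant load and a fixed inverter efficiency; real runtime
    is usually shorter because usable capacity drops at high discharge rates.
    """
    if load_w <= 0:
        raise ValueError("load must be positive")
    return battery_wh * inverter_efficiency / load_w * 60.0


def bridges_generator_start(battery_wh, load_w, generator_start_s,
                            inverter_efficiency=0.9):
    """Return True if the estimated runtime covers the generator start time."""
    runtime_s = ups_runtime_minutes(battery_wh, load_w, inverter_efficiency) * 60.0
    return runtime_s >= generator_start_s


# Hypothetical example: 2 kWh battery, 6 kW load, generator online in 60 s.
print(f"Estimated runtime: {ups_runtime_minutes(2000.0, 6000.0):.1f} min")
print("Covers generator start:", bridges_generator_start(2000.0, 6000.0, 60.0))
```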

Thermal Considerations for Data Center:

Hot and Cold aisle:

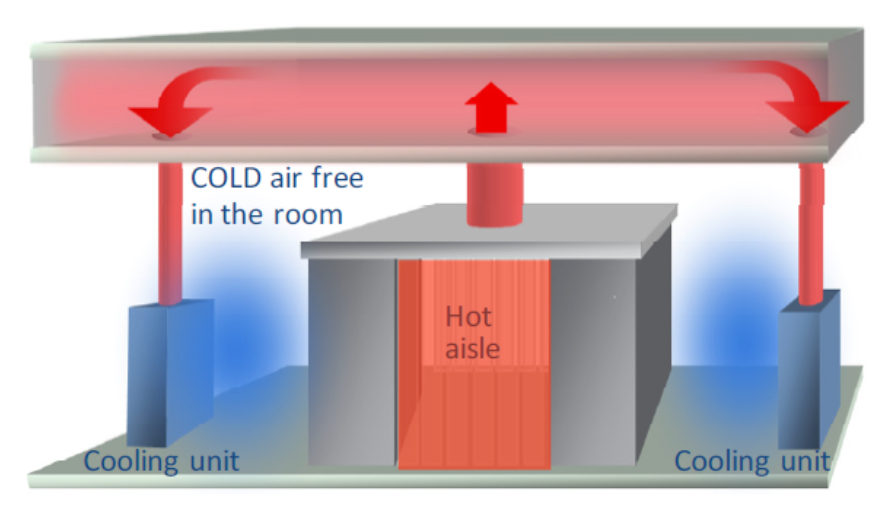

Because most IT equipment breathes from front to rear, the hot/cold aisle layout aligns the cabinets in rows so that the server exhausts of adjacent rows face one another, forming the hot aisle, while the intakes face one another across the cold aisle. Hot aisle containment immediately captures server exhaust air and restricts its entry to the rest of the data center. The exhaust air's destination depends on the containment configuration. Energy-intensive IT equipment needs good isolation of the “cold” inlet from the “hot” discharge. CRAC airflow can be reduced if no mixing occurs, and the overall supply temperature in the data center can be raised if air is delivered to the equipment without mixing. Coils and chillers are also more efficient with higher temperature differences.
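The link between mixing and airflow follows from a simple sensible heat balance, Q = ρ · V · cp · ΔT: for a fixed heat load, the required airflow V falls as the temperature rise ΔT across the equipment grows. The sketch below assumes typical properties for air near room conditions (density 1.2 kg/m³, specific heat 1005 J/(kg·K)); the rack load and temperature rises are hypothetical example values.

```python
AIR_DENSITY_KG_M3 = 1.2    # assumed density of air near room conditions
AIR_CP_J_KG_K = 1005.0     # assumed specific heat of air
M3_PER_S_TO_CFM = 2118.88  # unit conversion: cubic metres/second to CFM


def required_airflow_m3s(heat_load_w, delta_t_k):
    """Airflow needed to remove heat_load_w with an air temperature rise delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * delta_t_k)


# Hypothetical 5 kW rack: compare a small and a large temperature rise.
for delta_t in (10.0, 15.0):
    flow = required_airflow_m3s(5000.0, delta_t)
    print(f"dT = {delta_t:.0f} K -> {flow:.3f} m^3/s ({flow * M3_PER_S_TO_CFM:.0f} CFM)")
```

When hot and cold air mix, the effective temperature rise seen by the cooling units shrinks, so more air has to be moved for the same heat load. This is the kind of simple balance that CFD goes beyond by resolving where the air actually flows.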

- Hot aisle:

The goal of hot aisle containment is to capture the hot exhaust from IT equipment and direct it to the CRAC as quickly as possible. Two main types of system achieve containment: the "room" and the "chimney". Similar to cold aisle containment, the "room" method seeks to separate the hot aisle with barriers made from curtains or metal enclosures. The "chimney" method uses special server racks with chimneys to direct hot exhaust into the return air system or overhead plenum. Hot aisle containment is an excellent option for new data center builds and those with existing hot air return ducts or over-ceiling plenum space.

- Cold aisle:

As the name implies, cold aisle containment attempts to isolate the cold air in a "room" of its own. By using containment curtains, metal panels or other similar barriers, the cooling air is concentrated at the equipment intake. The cold air must pass through the server racks, cooling the equipment, before entering the rest of the room. Clearly, the focus of cold aisle containment is to cool the IT equipment, not the whole room, with targeted cooling at the equipment inlet. Cold aisle containment can be a cost-effective approach for older data centers with open-room hot air return schemes.

ASHRAE:

ASHRAE (American Society of Heating, Refrigerating, and Air-Conditioning Engineers) is an organization devoted to the advancement of indoor-environment-control technology in the heating, ventilation, and air conditioning (HVAC) industry. One of the most important functions of the organization is to promote research and development in efficient, environmentally friendly technologies. The temperature and humidity in a data center are controlled through air conditioning. ASHRAE's "Thermal Guidelines for Data Processing Environments" recommends a temperature range of 18–27 °C (64–81 °F), a dew point range of 5–15 °C (41–59 °F), and a maximum relative humidity of 60% for data center environments.
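As an illustration, a sensor reading can be checked against the recommended ranges quoted above. Sensors usually report temperature and relative humidity rather than dew point, so this sketch estimates the dew point with the Magnus approximation (the coefficients 17.62 and 243.12 °C are one commonly used set, and their use here is an assumption of this example, not part of the ASHRAE guideline). The function names are hypothetical.

```python
import math

# Recommended ranges as quoted in the text above.
TEMP_RANGE_C = (18.0, 27.0)
DEW_POINT_RANGE_C = (5.0, 15.0)
MAX_RELATIVE_HUMIDITY = 60.0


def dew_point_c(temp_c, rh_percent):
    """Approximate dew point (deg C) using the Magnus formula."""
    if not 0.0 < rh_percent <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    a, b = 17.62, 243.12  # Magnus coefficients (assumed; b in deg C)
    gamma = math.log(rh_percent / 100.0) + a * temp_c / (b + temp_c)
    return b * gamma / (a - gamma)


def within_recommended_envelope(temp_c, rh_percent):
    """Return True if the reading satisfies all three recommended limits."""
    dew_point = dew_point_c(temp_c, rh_percent)
    return (TEMP_RANGE_C[0] <= temp_c <= TEMP_RANGE_C[1]
            and DEW_POINT_RANGE_C[0] <= dew_point <= DEW_POINT_RANGE_C[1]
            and rh_percent <= MAX_RELATIVE_HUMIDITY)


# Hypothetical inlet readings: (temperature in deg C, relative humidity in %).
for reading in [(24.0, 50.0), (30.0, 40.0), (20.0, 20.0)]:
    print(reading, "->", within_recommended_envelope(*reading))
```

Note that a reading can fail on dew point alone: cool, very dry air may sit inside the temperature range and under the humidity cap while still falling below the lower dew point limit.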

Below is a short, informative video tour of a Microsoft GFS datacenter:

http://www.youtube.com/watch?v=hOxA1l1pQIw

References

- http://en.wikipedia.org/wiki/Data_center

- http://www.coolsimsoftware.com/WhitePapers.aspx

- http://www.coolsimsoftware.com/Portals/0/PDF/TN101_CoolSim4_EnergyCalculator.pdf

- http://www.datacenterjournal.com/facilities/cfd-for-data-centers-driving-down-cost-and-improving-ease-of-use/

- http://www.apcmedia.com/salestools/TEVS-5TXPED/TEVS-5TXPED_R3_EN.pdf

- http://www.thegreengrid.org/~/media/WhitePapers/Qualitative%20Analysis
